GestureBot

A framework for integrating gesture generation models into interactive conversational agents.

Embodied conversational agents (ECAs) benefit from non-verbal behavior for natural and efficient interaction with users. Gesticulation – hand and arm movements accompanying speech – is an essential part of non-verbal behavior. Gesture generation models have been developed for several decades, starting with rule-based methods and moving to mainly data-driven ones. To date, however, recent end-to-end gesture generation methods have not been evaluated in real-time interaction with users. We propose a proof-of-concept framework to facilitate the evaluation of modern gesture generation models in interaction.

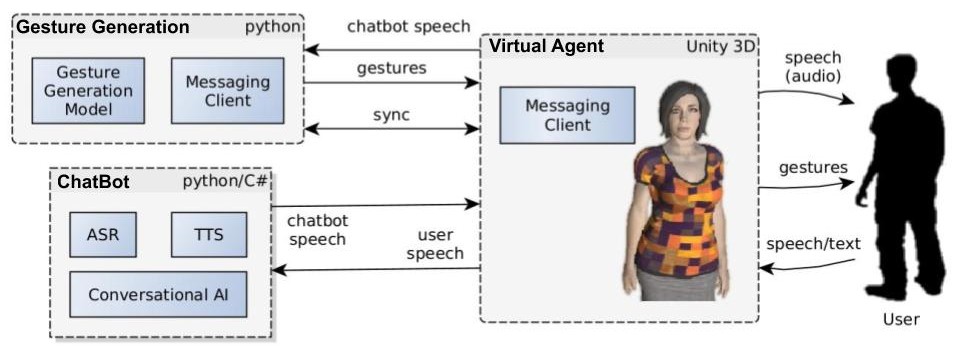

Our framework contains three components: 1) a 3D interactive agent; 2) a chatbot backend; 3) a gesticulating system. Each component can be replaced, making the proposed framework applicable for investigating the effect of different gesturing models in real-time interactions with different communication modalities, chatbot backends, or different agent appearances.
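The replaceable-component idea can be illustrated with a minimal Python sketch. This is not the framework's actual API: the names `ChatbotBackend`, `GestureGenerator`, `Agent`, and `Pipeline`, along with the three stand-in implementations, are hypothetical and chosen only for illustration. The sketch also assumes text-only input, whereas a real gesture model may take speech audio as well.

```python
from dataclasses import dataclass
from typing import List, Protocol

# Hypothetical interfaces: one per framework component.
# A motion clip is modelled as a list of pose frames (joint values per frame).


class ChatbotBackend(Protocol):
    def reply(self, user_utterance: str) -> str: ...


class GestureGenerator(Protocol):
    def generate(self, text: str) -> List[List[float]]: ...


class Agent(Protocol):
    def perform(self, text: str, motion: List[List[float]]) -> None: ...


class EchoChatbot:
    """Stand-in backend that simply repeats the user's utterance."""

    def reply(self, user_utterance: str) -> str:
        return f"You said: {user_utterance}"


class RestPoseGestures:
    """Stand-in gesture model: one all-zero pose frame per word."""

    def __init__(self, n_joints: int = 3) -> None:
        self.n_joints = n_joints

    def generate(self, text: str) -> List[List[float]]:
        return [[0.0] * self.n_joints for _ in text.split()]


class LoggingAgent:
    """Stand-in for the 3D agent: records what it would speak and animate."""

    def __init__(self) -> None:
        self.log: List[str] = []

    def perform(self, text: str, motion: List[List[float]]) -> None:
        self.log.append(f"{text} ({len(motion)} frames)")


@dataclass
class Pipeline:
    """Wires the three components; any of them can be swapped independently."""

    chatbot: ChatbotBackend
    gestures: GestureGenerator
    agent: Agent

    def step(self, user_utterance: str) -> str:
        response = self.chatbot.reply(user_utterance)   # 2) chatbot backend
        motion = self.gestures.generate(response)       # 3) gesticulating system
        self.agent.perform(response, motion)            # 1) interactive agent
        return response


if __name__ == "__main__":
    agent = LoggingAgent()
    pipeline = Pipeline(EchoChatbot(), RestPoseGestures(), agent)
    pipeline.step("hello there")
    print(agent.log)
```

Because `Pipeline` depends only on the three interfaces, comparing two gesturing models in this sketch would amount to passing a different `GestureGenerator` while keeping the chatbot and agent fixed.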

The implementation of the system, with two runnable examples, is available in this GitHub repository.